3d Design Jobe Near Spanishfork Utah

Now Let's write the code to implement KNN without using Scikit learn.

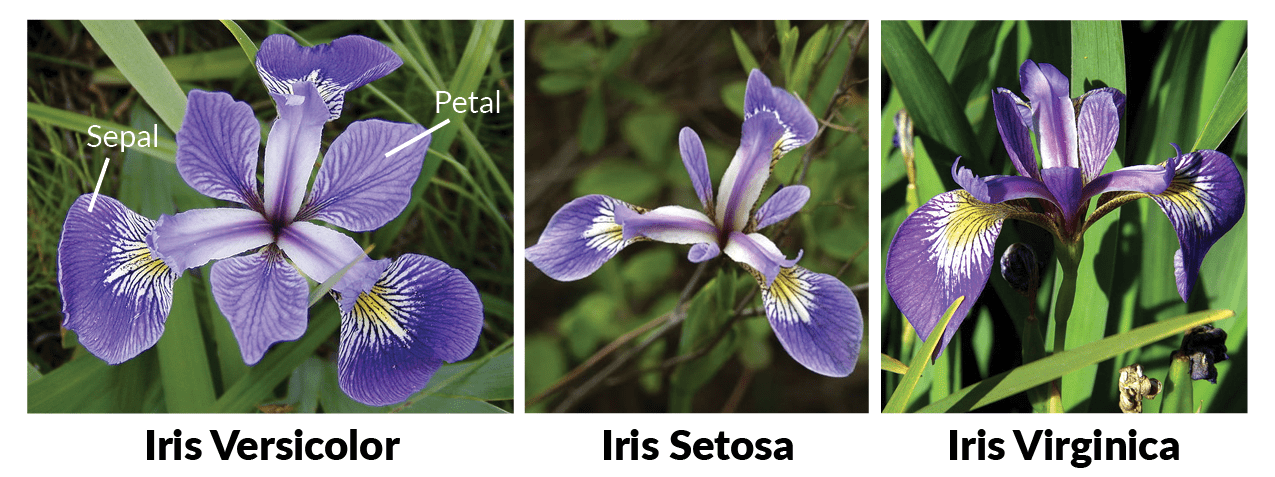

We will be using iris dataset for implementation and prediction. I will assume you know about the iris dataset. You can read about this quite popular dataset here.

Importing essential libraries

import numpy as np

import scipy.spatial

from collections import Counter loading the Iris-Flower dataset from Sklearn

from sklearn import datasets

from sklearn.model_selection import train_test_split iris = datasets.load_iris() X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, random_state = 42, test_size = 0.2)

We've made a split, for training our algorithm and then testing it on the test data separately.

We will define a class 'KNN' inside which we will define every essential function that will make our algorithm work. We will be having the following methods inside our class.

- fit : As discussed earlier, it'll just keep the data with itself, since KNN does not perform any explicit training process.

- Distance: We will calculate Euclidean distance here.

- Predict: This is the phase where we will predict the class for our testing instance using the complete training data. We will implement the 3 stepped process discusses above in this method.

- Score: Finally We'll have a score method, to calculate the score for our model based on the test data

- What about 'K' ?: The most important guy here is K, we will pass 'K' as an argument while initializing the object for our KNN class(inside __init__)

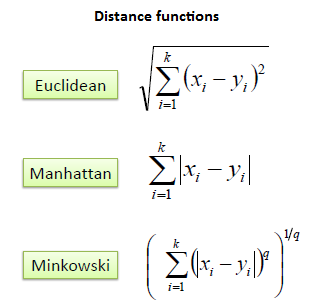

Here is a good picture I got for an idea about various distance metrics used:

Each one of them can be easily calculated using their Numpy inbuild functions or can also be coded directly if you want.

I'll first write the complete class and then we will discuss the flow:

class KNN:

def __init__(self, k):

self.k = k def fit(self, X, y):

self.X_train = X

self.y_train = y

def distance(self, X1, X2):

distance = scipy.spatial.distance.euclidean(X1, X2)

def predict(self, X_test):

final_output = []

for i in range(len(X_test)):

d = []

votes = []

for j in range(len(X_train)):

dist = scipy.spatial.distance.euclidean(X_train[j] , X_test[i])

d.append([dist, j])

d.sort()

d = d[0:self.k]

for d, j in d:

votes.append(y_train[j])

ans = Counter(votes).most_common(1)[0][0]

final_output.append(ans) return final_output

def score(self, X_test, y_test):

predictions = self.predict(X_test)

return (predictions == y_test).sum() / len(y_test)

See what's happening:

We will pass the K while creating an object for the class 'KNN'.

Fit method just takes in the training data, nothing else.

We used scipy.spatial.distance.euclidean for calculating the distance between two points.

Predict method runs a loop for every test data point, each time calculating distance between the test instance and every training instance. It stores distance and index of the training data together in a 2D list. It then sorts that list based on distance and then updates the list keeping only the K shortest distances(along with their indices) in the list.

It then pulls out labels corresponding to those K nearest data points and checks which label has the majority using Counter. That majority label becomes the label of the test data point.

Score method just compares our test output with their actual output to find the accuracy of our prediction.

Kudos! That's it. It's really that simple! Now let's run our model and test our algorithm on the test data we split apart earlier.

clf = KNN(3)

clf.fit(X_train, y_train)

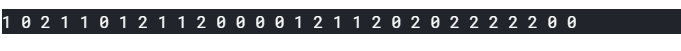

prediction = clf.predict(X_test) for i in prediction:

print(i, end= ' ')

That's our prediction for the test data! We wouldn't mind comparing it with the actual test labels to see how we did. Let's see.

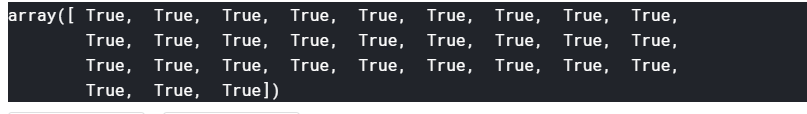

prediction == y_test

So! we are on the right road. Since every prediction is correct we will obviously have a perfect score on our prediction.

clf.score(X_test, y_test)

And we're done! It's really that simple and neat. KNN has a very basic and minimalist approach which is a lot intuitive, yet it is a powerful and versatile machine learning algorithm equipped to solve a wide array of problems.

That's all folks! :)

3d Design Jobe Near Spanishfork Utah

Source: https://medium.com/analytics-vidhya/implementing-k-nearest-neighbours-knn-without-using-scikit-learn-3905b4decc3c

Posted by: merlinawayet1963.blogspot.com

0 Response to "3d Design Jobe Near Spanishfork Utah"

Post a Comment